Our paper 'Tri-MipRF: Tri-Mip Representation for Efficient Anti-Aliasing Neural Radiance Fields' has been accepted to ICCV 2023 as an Oral Presentation.

Tri-MipRF: Tri-Mip Representation for Efficient Anti-Aliasing Neural Radiance Fields

Despite the tremendous progress in neural radiance fields (NeRF), a trade-off between quality and efficiency remains. For example, MipNeRF produces finely detailed, anti-aliased renderings but takes days to train, while Instant-ngp completes reconstruction in a few minutes but suffers from blurring or aliasing when rendering at various distances or resolutions, because it ignores the sampling area.

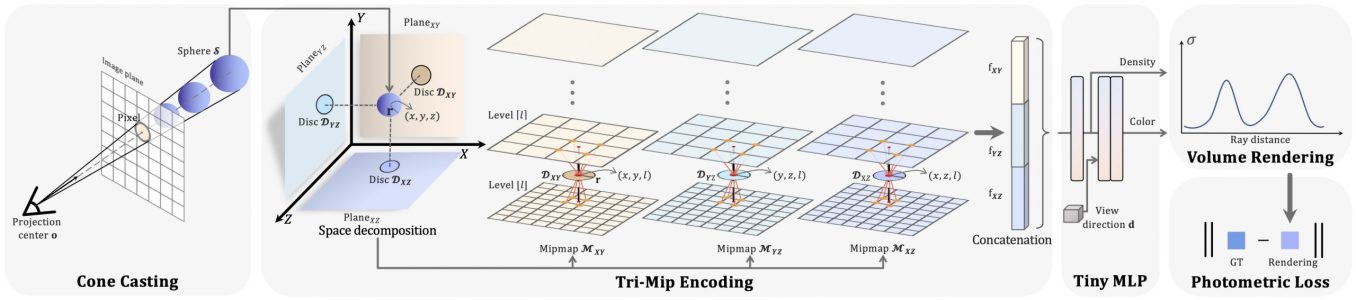

To this end, we propose a novel Tri-Mip encoding (à la “mipmap”) that enables both instant reconstruction and anti-aliased high-fidelity rendering for neural radiance fields. The key is to factorize the pre-filtered 3D feature space into three orthogonal mipmaps. In this way, we can efficiently perform 3D area sampling by taking advantage of 2D pre-filtered feature maps, which significantly improves rendering quality without sacrificing efficiency. To work with the novel Tri-Mip representation, we propose a cone-casting rendering technique that efficiently samples anti-aliased 3D features with the Tri-Mip encoding, taking into account both pixel imaging and observing distance.
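To make the idea of three orthogonal mipmaps more concrete, here is a minimal NumPy sketch. It is not the released implementation: the function names, the 2x2 average pooling used for pre-filtering, the dictionary layout of the planes, and in particular the `radius_to_level` mapping from a sampling radius to a mip level are all assumptions made for illustration. It only shows how a 3D point with an associated sampling footprint could be encoded by looking up three pre-filtered 2D feature planes.

```python
import numpy as np

def build_mip_pyramid(plane):
    """Pre-filter a (H, W, C) feature plane by repeated 2x2 average pooling."""
    levels = [plane]
    while levels[-1].shape[0] > 1 and levels[-1].shape[1] > 1:
        p = levels[-1]
        h, w, c = p.shape
        p = p[: h - h % 2, : w - w % 2]  # drop a trailing odd row/column
        levels.append(p.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3)))
    return levels

def bilinear(plane, u, v):
    """Bilinearly sample a (H, W, C) plane at normalized coords u, v in [0, 1]."""
    h, w, _ = plane.shape
    x, y = u * (w - 1), v * (h - 1)
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * plane[y0, x0] + fx * plane[y0, x1]
    bottom = (1 - fx) * plane[y1, x0] + fx * plane[y1, x1]
    return (1 - fy) * top + fy * bottom

def sample_mip(pyramid, u, v, level):
    """Trilinear lookup: bilinear within two adjacent levels, linear across them."""
    level = float(np.clip(level, 0, len(pyramid) - 1))
    lo = int(np.floor(level))
    hi = min(lo + 1, len(pyramid) - 1)
    t = level - lo
    return (1 - t) * bilinear(pyramid[lo], u, v) + t * bilinear(pyramid[hi], u, v)

def radius_to_level(radius, base_res):
    """Assumed mapping (not taken from the paper): a footprint of one base texel
    maps to level 0, and each doubling of the footprint adds one level."""
    footprint_in_texels = 2.0 * radius * base_res
    return float(np.log2(max(footprint_in_texels, 1.0)))

def tri_mip_encode(pyramids, point, level):
    """Project a point in [0, 1]^3 onto the XY, XZ and YZ planes and concatenate
    the pre-filtered features sampled at the given mip level."""
    x, y, z = point
    f_xy = sample_mip(pyramids["xy"], x, y, level)
    f_xz = sample_mip(pyramids["xz"], x, z, level)
    f_yz = sample_mip(pyramids["yz"], y, z, level)
    return np.concatenate([f_xy, f_xz, f_yz])

# Usage: random 64x64 planes with 8 feature channels each (hypothetical sizes).
rng = np.random.default_rng(0)
pyramids = {k: build_mip_pyramid(rng.normal(size=(64, 64, 8)))
            for k in ("xy", "xz", "yz")}
level = radius_to_level(radius=0.05, base_res=64)
feature = tri_mip_encode(pyramids, (0.2, 0.5, 0.8), level)
print(feature.shape)
```

In this sketch a larger sampling radius, for example from a more distant sample along a cone, selects a coarser mip level, so the returned feature is averaged over a wider area. The three 2D lookups stand in for a single 3D area sample, which is the efficiency argument made above.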

Extensive experiments on both synthetic and real-world datasets demonstrate that our method achieves state-of-the-art rendering quality and reconstruction speed while maintaining a compact representation that reduces the model size by 25% compared with Instant-ngp.

PDF: https://arxiv.org/abs/2307.11335

Code: https://github.com/wbhu/Tri-MipRF