Our paper 'TransMVSNet: Global Context-aware Multi-view Stereo Network with Transformers' was accepted by CVPR 2022.

TransMVSNet: Global Context-aware Multi-view Stereo Network with Transformers

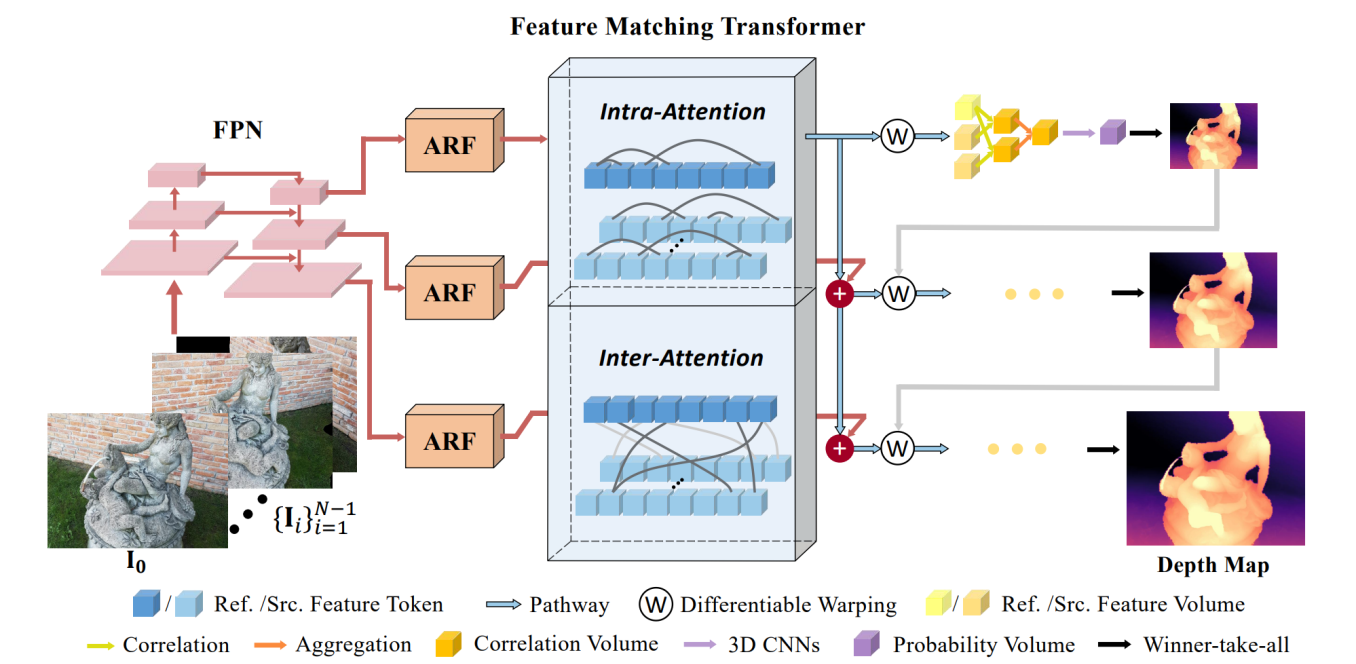

In this paper, we present TransMVSNet, based on our exploration of feature matching in multi-view stereo (MVS). We return MVS to its nature as a feature matching task and therefore propose a powerful Feature Matching Transformer (FMT) that leverages intra- (self-) and inter- (cross-) attention to aggregate long-range context information within and across images. To help the FMT adapt, we use an Adaptive Receptive Field (ARF) module to ensure a smooth transition in the scope of features, and we bridge different stages with a feature pathway that passes transformed features and gradients across scales. In addition, we apply pair-wise feature correlation to measure similarity between features, and adopt an ambiguity-reducing focal loss to strengthen the supervision. To the best of our knowledge, TransMVSNet is the first attempt to apply Transformers to the task of MVS. As a result, our method achieves state-of-the-art performance on the DTU dataset, the Tanks and Temples benchmark, and the BlendedMVS dataset. The code of our method will be made available at https://github.com/MegviiRobot/TransMVSNet.
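To make the last two ingredients of the abstract more concrete, here is a minimal single-pixel sketch of pair-wise feature correlation followed by a focal loss over depth hypotheses. It is not the code from the repository: the function names (`pairwise_correlation`, `softmax`, `focal_loss`), the plain dot product as the similarity measure, the softmax step, and the default `gamma=2.0` are all assumptions chosen for illustration, and real implementations operate on batched tensors rather than Python lists.

```python
import math


def pairwise_correlation(ref_feat, warped_src_feats):
    """Dot product between one reference feature vector and the source
    feature warped to each depth hypothesis (one score per hypothesis).
    Assumption: similarity is a plain inner product."""
    return [sum(r * s for r, s in zip(ref_feat, src)) for src in warped_src_feats]


def softmax(scores):
    """Turn correlation scores into a probability over depth hypotheses."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def focal_loss(probs, gt_index, gamma=2.0):
    """Standard focal loss for one pixel: -(1 - p)^gamma * log(p), where p is
    the probability assigned to the ground-truth depth hypothesis.
    Assumption: gamma=2.0 is an illustrative default, not a reported setting."""
    p = max(probs[gt_index], 1e-12)  # clamp to avoid log(0)
    return -((1.0 - p) ** gamma) * math.log(p)


# Toy example: a 2-channel feature and three depth hypotheses (made-up values).
ref_feat = [1.0, 0.0]
warped_src_feats = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]

scores = pairwise_correlation(ref_feat, warped_src_feats)
probs = softmax(scores)
loss = focal_loss(probs, gt_index=1)
```

With `gamma=0` the loss reduces to ordinary cross-entropy. Larger values of `gamma` down-weight pixels whose ground-truth hypothesis already receives high probability, so the supervision concentrates on ambiguous pixels.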

PDF: https://arxiv.org/pdf/2104.14205

Code: https://github.com/MegviiRobot/TransMVSNet