Converting a Keras Model to TensorFlow Format and Using It

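TensorFlow has officially integrated Keras as its recommended high-level API. Keras is genuinely convenient to use, and its code is clean and concise, without the boilerplate that low-level TensorFlow requires, which makes it very helpful for rapid prototyping and experiments. In some situations, however, we need to mix Keras and TensorFlow when defining or saving a model, and that calls for some conversion.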

System environment

Ubuntu 16.04

TensorFlow 1.10.1 (bundled Keras 2.1.6-tf)

1. The Fashion MNIST dataset

1) About the data

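Fashion-MNIST [1] is an image dataset designed as a replacement for the MNIST handwritten digit set. It is provided by the research department of Zalando, a German fashion technology company, and contains 70,000 front-view images of products from 10 categories. Its size, format, and training/test split are identical to the original MNIST: 60,000 training and 10,000 test images, each a 28x28 grayscale picture. You can use it directly to benchmark machine learning and deep learning algorithms without changing any code [11].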

Typical Fashion-MNIST samples look like this, with every three rows showing one class:


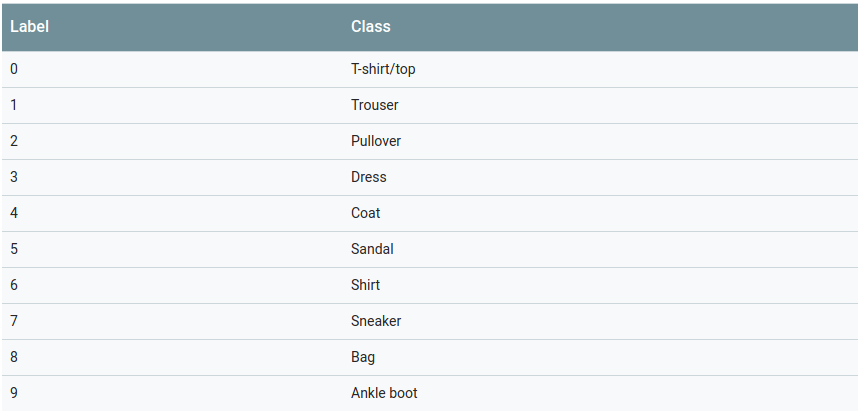

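Like MNIST, Fashion-MNIST has 10 classes, but instead of the digits 0-9 they are, in label order 0 to 9 (the same order as the class_names list in the code later in this article): T-shirt/top, Trouser, Pullover, Dress, Coat, Sandal, Shirt, Sneaker, Bag, and Ankle boot.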

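Anyone who has used the original MNIST knows that even a very simple network easily scores above 99%, and traditional methods also reach high scores without much effort. Handwritten digit recognition on MNIST is simply too easy to be meaningful as a baseline experiment anymore. TensorFlow and many other deep learning frameworks now recommend Fashion MNIST as their introductory dataset.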

2) Reading the data

Keras provides a simple interface that downloads and loads Fashion-MNIST in one call:

    from keras.datasets import fashion_mnist

    (x_train, y_train), (x_test, y_test) = fashion_mnist.load_data()

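Because of network conditions in mainland China, however, I don't recommend this approach; it is better to download the files and read them locally. Here I store the data directly in the data/fashion directory.

Baidu Netdisk download: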

https://pan.baidu.com/s/19zZqU5tSwZyY780z8Y8_VA
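Before running the reader that follows, it can help to confirm that the downloaded files ended up where the code expects them. The sketch below assumes the four standard Fashion-MNIST file names, derived from the `%s-labels-idx1-ubyte.gz` and `%s-images-idx3-ubyte.gz` patterns that `load_mnist` uses with `kind` set to `train` and `t10k`. The helper name `missing_files` is hypothetical and not part of any library.

```python
import os

# File names derived from the patterns used by load_mnist
# for kind='train' and kind='t10k'.
EXPECTED_FILES = [
    "train-images-idx3-ubyte.gz",
    "train-labels-idx1-ubyte.gz",
    "t10k-images-idx3-ubyte.gz",
    "t10k-labels-idx1-ubyte.gz",
]


def missing_files(data_dir="data/fashion"):
    """Return the expected dataset files that are not present in data_dir."""
    return [name for name in EXPECTED_FILES
            if not os.path.isfile(os.path.join(data_dir, name))]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing files in data/fashion:", ", ".join(missing))
    else:
        print("All four Fashion-MNIST files found.")
```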

The code for reading the data is as follows (save as utils/mnist_reader.py):

    import gzip
    import os

    import numpy as np


    def load_mnist(path, kind='train'):
        """Load MNIST data from `path`"""
        labels_path = os.path.join(path, '%s-labels-idx1-ubyte.gz' % kind)
        images_path = os.path.join(path, '%s-images-idx3-ubyte.gz' % kind)

        # Label files: skip the 8-byte header, then one uint8 per label
        with gzip.open(labels_path, 'rb') as lbpath:
            labels = np.frombuffer(lbpath.read(), dtype=np.uint8, offset=8)

        # Image files: skip the 16-byte header, then 784 uint8 pixels per image
        with gzip.open(images_path, 'rb') as imgpath:
            images = np.frombuffer(imgpath.read(), dtype=np.uint8,
                                   offset=16).reshape(len(labels), 784)

        return images, labels
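The `offset=8` and `offset=16` arguments skip the IDX file headers: a label file starts with two big-endian 32-bit integers (magic number and item count), and an image file adds two more (rows and columns). The sketch below writes a tiny synthetic dataset in that layout and reads it back with the same parsing logic. The helper names `write_fake_idx` and `read_idx` and the three fake images are made up for illustration; this is not the real dataset.

```python
import gzip
import os
import struct
import tempfile

import numpy as np


def write_fake_idx(path, kind, images, labels):
    """Write a tiny synthetic dataset in the gzip-compressed IDX layout."""
    n, rows, cols = images.shape
    with gzip.open(os.path.join(path, '%s-labels-idx1-ubyte.gz' % kind), 'wb') as f:
        f.write(struct.pack('>II', 2049, n))                # 8-byte header
        f.write(labels.astype(np.uint8).tobytes())
    with gzip.open(os.path.join(path, '%s-images-idx3-ubyte.gz' % kind), 'wb') as f:
        f.write(struct.pack('>IIII', 2051, n, rows, cols))  # 16-byte header
        f.write(images.astype(np.uint8).tobytes())


def read_idx(path, kind):
    """Same parsing logic as load_mnist: skip the header, read raw uint8."""
    with gzip.open(os.path.join(path, '%s-labels-idx1-ubyte.gz' % kind), 'rb') as f:
        labels = np.frombuffer(f.read(), dtype=np.uint8, offset=8)
    with gzip.open(os.path.join(path, '%s-images-idx3-ubyte.gz' % kind), 'rb') as f:
        images = np.frombuffer(f.read(), dtype=np.uint8, offset=16)
    return images.reshape(len(labels), 784), labels


tmp_dir = tempfile.mkdtemp()
fake_images = np.arange(3 * 28 * 28, dtype=np.uint32).reshape(3, 28, 28) % 256
fake_labels = np.array([9, 0, 3])
write_fake_idx(tmp_dir, 'train', fake_images, fake_labels)

images, labels = read_idx(tmp_dir, 'train')
print(images.shape, labels.tolist())  # (3, 784) [9, 0, 3]
```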

2. Defining and saving a CNN model with Keras

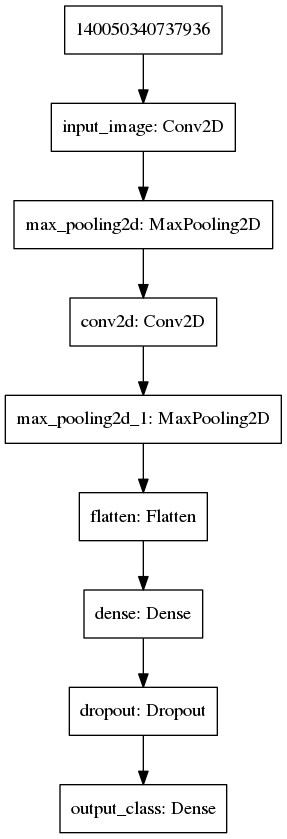

下面的代码中我们定义了一个非常简单的 CNN 网络,结构图如下:
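As a rough check of how large this network is, the parameter count of each layer can be worked out by hand from the layer definitions in train.py (2x2 kernels, 'same' padding, 2x2 max pooling, then Dense layers of 256 and 10 units). The sketch below is plain arithmetic and does not call Keras; the helper names are made up for illustration, and the totals should agree with `model.summary()` only if the layers are exactly as listed.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Weights plus one bias per filter."""
    return (kernel_size * kernel_size * in_channels + 1) * filters


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit."""
    return (in_units + 1) * out_units


side, channels = 28, 1

conv1 = conv2d_params(2, channels, 64)   # 'same' padding keeps 28x28
side //= 2                               # 2x2 max pooling -> 14x14
conv2 = conv2d_params(2, 64, 32)
side //= 2                               # 2x2 max pooling -> 7x7
flat = side * side * 32                  # Flatten -> 1568 values
dense1 = dense_params(flat, 256)
dense2 = dense_params(256, 10)

total = conv1 + conv2 + dense1 + dense2
print(conv1, conv2, dense1, dense2, total)  # 320 8224 401664 2570 412778
```

Almost all of the parameters sit in the first Dense layer, which is typical for small CNNs that flatten directly into a wide fully connected layer.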

We train this network and save it in the standard Keras h5 format. This part of the code is fairly basic, so I won't explain it in detail.
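The data preparation in the training script comes down to three steps: reshape each flat 784-value row into 28x28x1, scale the pixels to [0, 1], and one-hot encode the labels. Here is a minimal NumPy-only sketch of those steps on random stand-in data. The `one_hot` function is an illustrative stand-in for `tf.keras.utils.to_categorical`, not the library function itself.

```python
import numpy as np


def one_hot(labels, num_classes=10):
    """Illustrative stand-in for to_categorical: one row per label."""
    encoded = np.zeros((len(labels), num_classes), dtype='float32')
    encoded[np.arange(len(labels)), labels] = 1.0
    return encoded


# Random stand-in for the (N, 784) uint8 array returned by load_mnist.
rng = np.random.RandomState(0)
raw_images = rng.randint(0, 256, size=(5, 784)).astype(np.uint8)
raw_labels = np.array([9, 2, 1, 1, 6], dtype=np.uint8)

images = raw_images.reshape(raw_images.shape[0], 28, 28, 1)  # add channel axis
images = images.astype('float32') / 255                       # scale to [0, 1]
labels = one_hot(raw_labels)

print(images.shape, images.dtype, labels.shape)
print(labels[0])
```

The one-hot row for label 9 has its single 1 in the last position, which is the form in which the ground truth appears in the training log further down.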

The Keras model definition and training code is as follows (save as train.py):

    # TensorFlow and tf.keras
    import os

    import numpy as np
    import tensorflow as tf
    from tensorflow import keras

    # Dataset
    import utils.mnist_reader as mnist_reader

    print(tf.__version__)
    print(keras.__version__)

    train_images, train_labels = mnist_reader.load_mnist('data/fashion', kind='train')
    test_images, test_labels = mnist_reader.load_mnist('data/fashion', kind='t10k')

    class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
                   'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

    train_images = train_images.reshape(train_images.shape[0], 28, 28, 1)
    test_images = test_images.reshape(test_images.shape[0], 28, 28, 1)

    train_images = train_images.astype('float32')
    test_images = test_images.astype('float32')
    train_images /= 255
    test_images /= 255

    # Convert class vectors to binary class matrices (one-hot)
    train_labels = tf.keras.utils.to_categorical(train_labels, 10)
    test_labels = tf.keras.utils.to_categorical(test_labels, 10)

    model = tf.keras.Sequential()
    # Must define the input shape in the first layer of the neural network
    model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=2, padding='same', activation='relu',
                                     input_shape=(28, 28, 1), name='input_image'))
    model.add(tf.keras.layers.MaxPooling2D(pool_size=2))
    model.add(tf.keras.layers.Conv2D(filters=32, kernel_size=2, padding='same', activation='relu'))
    model.add(tf.keras.layers.MaxPooling2D(pool_size=2))
    model.add(tf.keras.layers.Flatten())
    model.add(tf.keras.layers.Dense(256, activation='relu'))
    model.add(tf.keras.layers.Dropout(0.5))
    model.add(tf.keras.layers.Dense(10, activation='softmax', name='output_class'))

    # Take a look at the model summary
    model.summary()

    # Visualize the model (needs pydot and graphviz)
    tf.keras.utils.plot_model(model, to_file='model.png')

    # Include the epoch in the file name (uses `str.format`)
    os.makedirs('train_logs', exist_ok=True)
    checkpoint_path = "train_logs/cp-{epoch:04d}.hdf5"
    cp_callback = tf.keras.callbacks.ModelCheckpoint(
        checkpoint_path, verbose=1, save_weights_only=False,
        # Save the full model every 5 epochs
        period=5)

    # Compile model with loss and optimizer
    model.compile(loss='categorical_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])

    # Train model
    model.fit(train_images, train_labels,
              batch_size=64,
              callbacks=[cp_callback],
              epochs=10)

    # Evaluate with the default progress bar
    model.evaluate(test_images, test_labels)

    # Evaluate the model on the test set and print the accuracy
    score = model.evaluate(test_images, test_labels, verbose=0)
    print("%s: %.2f%%" % (model.metrics_names[1], score[1]*100))

    # Predict using Keras
    predictions = model.predict(test_images)
    pred_index = np.argmax(predictions[0])

    # Print the first prediction next to its (one-hot) ground truth
    print('Predict:', pred_index, ' Label:', class_names[pred_index], 'GT:', test_labels[0])

    # Save whole graph & weights
    os.makedirs('models', exist_ok=True)
    model_path = "models/fashion_mnist.h5"
    model.save(model_path)
    print('Finish writing model to : {}'.format(model_path))
    print('You can convert model to tensorflow format:\npython3 utils/keras_to_tensorflow.py -input_model_file {} -output_model_file {}'.format(model_path, model_path + ".pb"))

If everything runs correctly, you will see output similar to the following:

    Epoch 00010: saving model to train_logs/cp-0010.hdf5
    10000/10000 [==============================] - 0s 44us/step
    acc: 91.95%
    Predict: 9 Label: Ankle boot GT: [0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]
    Finish writing model to : models/fashion_mnist.h5
    You can convert model to tensorflow format:
    python3 utils/keras_to_tensorflow.py -input_model_file models/fashion_mnist.h5 -output_model_file models/fashion_mnist.h5.pb

The file fashion_mnist.h5 is also saved in the models/ folder; it contains both the model structure and its parameters.
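Besides the final model, the ModelCheckpoint callback in train.py writes intermediate files whose names come from expanding the path template with `str.format`. The sketch below lists the names one would expect for 10 epochs with `period=5`. It assumes the callback saves once every `period` epochs and numbers epochs from 1, which is consistent with the `cp-0010.hdf5` line in the log above but is not taken from the Keras source.

```python
checkpoint_path = "train_logs/cp-{epoch:04d}.hdf5"
epochs, period = 10, 5

# Assumption: one checkpoint every `period` epochs, epochs numbered from 1.
saved = [checkpoint_path.format(epoch=e)
         for e in range(1, epochs + 1) if e % period == 0]
print(saved)  # ['train_logs/cp-0005.hdf5', 'train_logs/cp-0010.hdf5']
```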

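3. Converting the Keras model to TensorFlow format and saving it

For this step we use the keras_to_tensorflow [2] conversion tool. The idea behind it is simple: it first loads the .h5 model file with Keras, then uses TensorFlow's convert_variables_to_constants function to turn all variables into constants, and finally calls write_graph to produce a .pb file that contains both the network and its parameter values.

The code is given below. The original script lets you pass in the number and names of output nodes and uses identity to create new tensors; I modified it slightly to read the operation names of the Keras outputs directly, and at the end it prints the names of the original inputs and outputs for later use.

TensorFlow model conversion code (save as utils/keras_to_tensorflow.py):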

    # coding: utf-8
    """
    Copyright (c) 2017, by the Authors: Amir H. Abdi
    This software is freely available under the MIT Public License.
    Please see the License file in the root for details.

    The following code snippet will convert the keras model file,
    which is saved using model.save('kerasmodel_weight_file'),
    to the frozen .pb tensorflow weight file which holds both the
    network architecture and its associated weights.

    Input arguments:

    num_outputs: this value has nothing to do with the number of classes, batch_size, etc.,
        and it is mostly equal to 1. If the network is a **multi-stream network**
        (forked network with multiple outputs), set the value to the number of outputs.
    quantize: if set to True, use the quantize feature of Tensorflow
        (https://www.tensorflow.org/performance/quantization) [default: False]
    theano_backend: Theano and Tensorflow implement convolution in different ways.
        When using Keras with Theano backend, the order is set to 'channels_first'.
        This feature is not fully tested, and doesn't work with quantization [default: False]
    input_fld: directory holding the keras weights file [default: .]
    output_fld: destination directory to save the tensorflow files [default: .]
    input_model_file: name of the input weight file [default: 'model.h5']
    output_model_file: name of the output weight file [default: args.input_model_file + '.pb']
    graph_def: if set to True, will write the graph definition as an ascii file [default: False]
    output_graphdef_file: if graph_def is set to True, the file name of the
        graph definition [default: model.ascii]
    output_node_prefix: the prefix to use for output nodes. [default: output_node]
    """

    import argparse
    from pathlib import Path

    import tensorflow as tf
    from keras import backend as K
    from keras.models import load_model
    from tensorflow.python.framework import graph_io
    from tensorflow.python.framework import graph_util

    # Parse input arguments.
    # Note: with type=bool, argparse turns any non-empty string (even 'False') into True.
    parser = argparse.ArgumentParser(description='set input arguments')
    parser.add_argument('-input_fld', action="store",
                        dest='input_fld', type=str, default='.')
    parser.add_argument('-output_fld', action="store",
                        dest='output_fld', type=str, default='')
    parser.add_argument('-input_model_file', action="store",
                        dest='input_model_file', type=str, default='model.h5')
    parser.add_argument('-output_model_file', action="store",
                        dest='output_model_file', type=str, default='')
    parser.add_argument('-output_graphdef_file', action="store",
                        dest='output_graphdef_file', type=str, default='model.ascii')
    parser.add_argument('-num_outputs', action="store",
                        dest='num_outputs', type=int, default=1)
    parser.add_argument('-graph_def', action="store",
                        dest='graph_def', type=bool, default=False)
    parser.add_argument('-output_node_prefix', action="store",
                        dest='output_node_prefix', type=str, default='output_node')
    parser.add_argument('-quantize', action="store",
                        dest='quantize', type=bool, default=False)
    parser.add_argument('-theano_backend', action="store",
                        dest='theano_backend', type=bool, default=False)
    parser.add_argument('-f')
    args = parser.parse_args()
    parser.print_help()
    print('input args: ', args)

    if args.theano_backend is True and args.quantize is True:
        raise ValueError("Quantize feature does not work with theano backend.")

    # Initialize paths
    output_fld = args.input_fld if args.output_fld == '' else args.output_fld
    if args.output_model_file == '':
        args.output_model_file = str(Path(args.input_model_file).name) + '.pb'
    Path(output_fld).mkdir(parents=True, exist_ok=True)
    weight_file_path = str(Path(args.input_fld) / args.input_model_file)

    # Load keras model
    K.set_learning_phase(0)
    if args.theano_backend:
        K.set_image_data_format('channels_first')
    else:
        K.set_image_data_format('channels_last')

    try:
        net_model = load_model(weight_file_path)
    except ValueError as err:
        print('''Input file specified ({}) only holds the weights, and not the model definition.
        Save the model using model.save(filename.h5) which will contain the network architecture
        as well as its weights.
        If the model is saved using model.save_weights(filename.h5), the model architecture is
        expected to be saved separately in a json format and loaded prior to loading the weights.
        Check the keras documentation for more details (https://keras.io/getting-started/faq/)'''
              .format(weight_file_path))
        raise err

    # Original approach (disabled here): wrap each output in tf.identity under a new name.
    # num_output = args.num_outputs
    # pred = [None]*num_output
    # pred_node_names = [None]*num_output
    # for i in range(num_output):
    #     pred_node_names[i] = args.output_node_prefix+str(i)
    #     pred[i] = tf.identity(net_model.outputs[i], name=pred_node_names[i])

    # Modified approach: read the operation names straight from the Keras model.
    input_node_names = [node.op.name for node in net_model.inputs]
    print('Input nodes names are: ', input_node_names)
    pred_node_names = [node.op.name for node in net_model.outputs]
    print('Output nodes names are: ', pred_node_names)

    # [optional] write graph definition in ascii
    sess = K.get_session()

    if args.graph_def:
        f = args.output_graphdef_file
        tf.train.write_graph(sess.graph.as_graph_def(), output_fld, f, as_text=True)
        print('saved the graph definition in ascii format at: ', str(Path(output_fld) / f))

    # Convert variables to constants and save
    if args.quantize:
        from tensorflow.tools.graph_transforms import TransformGraph
        transforms = ["quantize_weights", "quantize_nodes"]
        transformed_graph_def = TransformGraph(sess.graph.as_graph_def(), [], pred_node_names, transforms)
        constant_graph = graph_util.convert_variables_to_constants(sess, transformed_graph_def, pred_node_names)
    else:
        constant_graph = graph_util.convert_variables_to_constants(sess, sess.graph.as_graph_def(), pred_node_names)
    graph_io.write_graph(constant_graph, output_fld, args.output_model_file, as_text=False)
    print('saved the freezed graph (ready for inference) at: ', str(Path(output_fld) / args.output_model_file))

Run the following command to convert the Keras model to TensorFlow's pb format:

    python3 utils/keras_to_tensorflow.py -input_model_file models/fashion_mnist.h5 -output_model_file models/fashion_mnist.h5.pb

If it runs without errors, it prints the following and generates models/fashion_mnist.h5.pb, which is the model converted to TensorFlow format:

    Input nodes names are: ['input_image_input']
    Output nodes names are: ['output_class/Softmax']
    saved the freezed graph (ready for inference) at: models/fashion_mnist.h5.pb

The output also tells you the names of the model's input and output tensors. Both are important, and we will use them later.

4. Loading the model with TensorFlow and predicting

The code for loading the model and predicting with the standard TensorFlow low-level API is as follows (save as load_predict.py):

    #!/usr/bin/env python
    import numpy as np
    import tensorflow as tf
    from tensorflow.python.platform import gfile

    # Dataset
    import utils.mnist_reader as mnist_reader

    train_images, train_labels = mnist_reader.load_mnist('data/fashion', kind='train')
    test_images, test_labels = mnist_reader.load_mnist('data/fashion', kind='t10k')

    class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
                   'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

    print("train_images.shape = {}".format(train_images.shape))

    train_images = train_images.reshape(train_images.shape[0], 28, 28, 1)
    test_images = test_images.reshape(test_images.shape[0], 28, 28, 1)

    train_images = train_images.astype('float32')
    test_images = test_images.astype('float32')
    train_images /= 255
    test_images /= 255

    # Initialize a tensorflow session
    with tf.Session() as sess:
        # Load the protobuf graph
        with gfile.FastGFile("models/fashion_mnist.h5.pb", 'rb') as f:
            graph_def = tf.GraphDef()
            graph_def.ParseFromString(f.read())
            # Add the graph to the session
            tf.import_graph_def(graph_def, name='')

        # Get graph
        graph = tf.get_default_graph()

        # Get tensor from graph
        pred = graph.get_tensor_by_name("output_class/Softmax:0")

        # Run the session, evaluating the output tensor for all test images
        res = sess.run(pred, feed_dict={'input_image_input:0': test_images})

        # Index of the most likely class for the first test image
        pred_index = np.argmax(res[0])

        # Print the prediction next to the ground-truth label
        print('Predict:', pred_index, ' Label:', class_names[pred_index], 'GT:', test_labels[0])

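The first part of this script reads the Fashion MNIST dataset, exactly as in the training code. The remaining parts are explained below.

Read the pb model file: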

    # Load the protobuf graph
    with gfile.FastGFile("models/fashion_mnist.h5.pb", 'rb') as f:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(f.read())
        # Add the graph to the session
        tf.import_graph_def(graph_def, name='')

Get the current computation graph:

    # Get graph
    graph = tf.get_default_graph()

Get the output tensor:

    # Get tensor from graph
    pred = graph.get_tensor_by_name("output_class/Softmax:0")

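Note that besides the output name output_class/Softmax given earlier, we also need to append an index, :0. An explanation can be found in [12]. Put simply, "output_class/Softmax" names an Operation, here the final Softmax layer. Most layers output a single Tensor, but a layer may also produce several output Tensors, so we have to say which one we want. When there is only one output it is addressed with :0, and the same applies to the input.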
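The naming rule can be illustrated without TensorFlow at all. The two helpers below are hypothetical (they are not TensorFlow functions): one appends `:0` to an operation name, the other splits a tensor name back into its operation name and output index and rejects a bare operation name.

```python
def split_tensor_name(name):
    """Split '<op_name>:<output_index>' into (op_name, output_index)."""
    op_name, sep, index = name.rpartition(':')
    if not sep or not index.isdigit():
        raise ValueError(
            "%r looks like an operation name; "
            "expected '<op_name>:<output_index>'" % name)
    return op_name, int(index)


def first_output(op_name):
    """Name of the first (index 0) output tensor of an operation."""
    return '%s:0' % op_name


print(first_output('output_class/Softmax'))      # output_class/Softmax:0
print(split_tensor_name('input_image_input:0'))  # ('input_image_input', 0)
```

Applied to the names printed by the conversion script, this gives `input_image_input:0` for the input and `output_class/Softmax:0` for the output.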

Run the graph and print the result; feed_dict={'input_image_input:0': test_images} passes test_images into the network as input:

    # Run the session, evaluating the output tensor for all test images
    res = sess.run(pred, feed_dict={'input_image_input:0': test_images})

    # Index of the most likely class for the first test image
    pred_index = np.argmax(res[0])

    # Print the prediction next to the ground-truth label
    print('Predict:', pred_index, ' Label:', class_names[pred_index], 'GT:', test_labels[0])
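Since `res` holds one row of class probabilities per test image, the same array can give predictions and an accuracy figure for the whole test set, not only the first image. The sketch below uses small made-up stand-ins for `res` and `test_labels` (three classes instead of ten, for brevity) and assumes the labels are integer class indices, as returned by `load_mnist` in load_predict.py.

```python
import numpy as np

# Stand-ins for `res` (softmax outputs) and `test_labels` (integer labels).
res = np.array([[0.05, 0.05, 0.90],
                [0.70, 0.20, 0.10],
                [0.10, 0.60, 0.30],
                [0.30, 0.30, 0.40]])
test_labels = np.array([2, 0, 1, 0])

pred_indices = np.argmax(res, axis=1)             # most likely class per image
accuracy = np.mean(pred_indices == test_labels)   # fraction predicted correctly
print(pred_indices.tolist(), accuracy)            # [2, 0, 1, 2] 0.75
```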

Run the whole script:

    python3 load_predict.py

If it runs correctly, you will see the following result:

    Predict: 9 Label: Ankle boot GT: 9

This matches the result we got earlier from the Keras predict interface for the same sample, which suggests that the converted model is correct.
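Comparing a single prediction is a quick sanity check. A somewhat stronger check, sketched below, compares the full output arrays from both paths. The arrays here are made-up stand-ins: in practice `keras_out` would come from `model.predict(test_images)` and `frozen_out` from `sess.run(...)`. The function name and the tolerance value are assumptions chosen for illustration.

```python
import numpy as np


def outputs_match(keras_out, frozen_out, atol=1e-5):
    """True if both arrays have the same shape and agree within atol."""
    keras_out = np.asarray(keras_out)
    frozen_out = np.asarray(frozen_out)
    return (keras_out.shape == frozen_out.shape
            and bool(np.allclose(keras_out, frozen_out, atol=atol)))


keras_out = np.array([[0.1, 0.9], [0.8, 0.2]])
frozen_out = keras_out + 1e-7          # tiny numerical noise
print(outputs_match(keras_out, frozen_out))          # True
print(outputs_match(keras_out, keras_out[:, ::-1]))  # False
```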

That covers the steps for converting a Keras model to TensorFlow and predicting with it. A complete example is available at:

https://github.com/skylook/tensorflow_cpp

Common problems

1. Error: This could mean that the variable was uninitialized.

    FailedPreconditionError (see above for traceback): Error while reading resource variable training/Adam/Variable from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/training/Adam/Variable/N10tensorflow3VarE does not exist.
    [[Node: training/Adam/Variable/Read/ReadVariableOp = ReadVariableOp[_class=["loc:@training/Adam/Variable"], dtype=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:GPU:0"](training/Adam/Variable)]]

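This usually happens when the model is saved directly after training. The fix is to declare a new model object and load the Keras model and its parameters into it.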

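2. Error: OSError: Unable to open file (unable to lock file, errno = 37, error message = 'No locks available')

If you run into the following problem when saving a model to h5 with Keras or loading it back (the traceback below comes from load_model):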

    Traceback (most recent call last):
      File "keras_to_tensorflow.py", line 114, in <module>
        net_model = load_model(weight_file_path)
      File "/usr/local/lib/python3.5/site-packages/keras/engine/saving.py", line 24$ , in load_model
        f = h5py.File(filepath, mode='r')
      File "/usr/local/lib/python3.5/site-packages/h5py/_hl/files.py", line 312, in __init__
        fid = make_fid(name, mode, userblock_size, fapl, swmr=swmr)
      File "/usr/local/lib/python3.5/site-packages/h5py/_hl/files.py", line 142, in make_fid
        fid = h5f.open(name, flags, fapl=fapl)
      File "h5py/_objects.pyx", line 54, in h5py._objects.with_phil.wrapper
      File "h5py/_objects.pyx", line 55, in h5py._objects.with_phil.wrapper
      File "h5py/h5f.pyx", line 78, in h5py.h5f.open
    OSError: Unable to open file (unable to lock file, errno = 37, error message = ' No locks available')

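This problem usually occurs on NFS file systems. The suggested fix is to set the following environment variable on the command line or add it to your ~/.bashrc file: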

    export HDF5_USE_FILE_LOCKING=FALSE
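If editing the shell environment is inconvenient, the same variable can be set from inside Python. As far as I know the HDF5 library reads it when it is first loaded, so the assignment should come before `h5py` or Keras is imported; treat that ordering as an assumption to verify in your own setup.

```python
import os

# Set this before anything imports h5py (Keras uses h5py for .h5 files).
os.environ["HDF5_USE_FILE_LOCKING"] = "FALSE"

# Only afterwards import the libraries that use HDF5, for example:
# from keras.models import load_model

print(os.environ["HDF5_USE_FILE_LOCKING"])  # FALSE
```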

3. Error: Tensor names must be of the form "<op_name>:<output_index>"

If your code hits the following error:

    ValueError: The name 'output_class/Softmax' refers to an Operation, not a Tensor. Tensor names must be of the form "<op_name>:<output_index>"

The fix is to append an output index such as :0 when you refer to the Tensor. See [12] for details.

References

[1] https://blog.keras.io/keras-as-a-simplified-interface-to-tensorflow-tutorial.html

[2] https://github.com/amir-abdi/keras_to_tensorflow

[3] https://towardsdatascience.com/freezing-a-keras-model-c2e26cb84a38

[4] https://medium.com/@brianalois/simple-keras-trained-model-export-for-tensorflow-serving-23fa5dfeeecc

[5] https://blog.metaflow.fr/tensorflow-how-to-freeze-a-model-and-serve-it-with-a-python-api-d4f3596b3adc

[6] https://github.com/BerkeleyGW/hdf5_bug

[7] https://github.com/h5py/h5py/issues/1082#issuecomment-414291188

[8] https://github.com/amir-abdi/keras_to_tensorflow

[9] https://www.tensorflow.org/tutorials/keras/save_and_restore_models

[10] https://github.com/tensorflow/tensorflow/blob/master/tensorflow/tools/graph_transforms/README.md#quantize_weights

[11] https://github.com/zalandoresearch/fashion-mnist/blob/master/README.zh-CN.md

[12] https://stackoverflow.com/questions/37849322/how-to-understand-the-term-tensor-in-tensorflow/37870634#37870634
